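| variable | correlation with height |
|---|---|
| thigh | -0.11301249 |
| hip | -0.10648937 |
| age | -0.05853538 |
| abdomen | -0.02173587 |
| knee | 0.02345904 |
| chest | 0.05838830 |
| biceps | 0.07441696 |
| ankle | 0.07920867 |
| weight | 0.11228791 |
| forearm | 0.16968040 |
| wrist | 0.28967468 |
| neck | 0.29147610 |
| height | 1.00000000 |

Model Selection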

As we gather

Sit where you’d like BUT challenge yourself to work with new people.

Introduce yourself and check in with each other as humans.

Come up with a team name that reflects the majors represented in your group without including the names of those majors (eg: “data rocks!” instead of “statistics geology”).

You will need the `reshape2` package today. Install it if it isn’t already installed.

Open the Rmd for PART 1. Do NOT open Part 2!

Announcements

- Thursday at 11:15am - MSCS Coffee Break

  - Smail Gallery

- HW2 change:

  - On Slack, I posted this on Tuesday:

    Exercise 3a: Please change the question so that the bigger model contains all predictors except `Cloud9am`.

    `Temp3pm ~ Temp9am + Location + Pressure9am + WindSpeed9am + Humidity9am`

Notes - Model Selection

CONTEXT

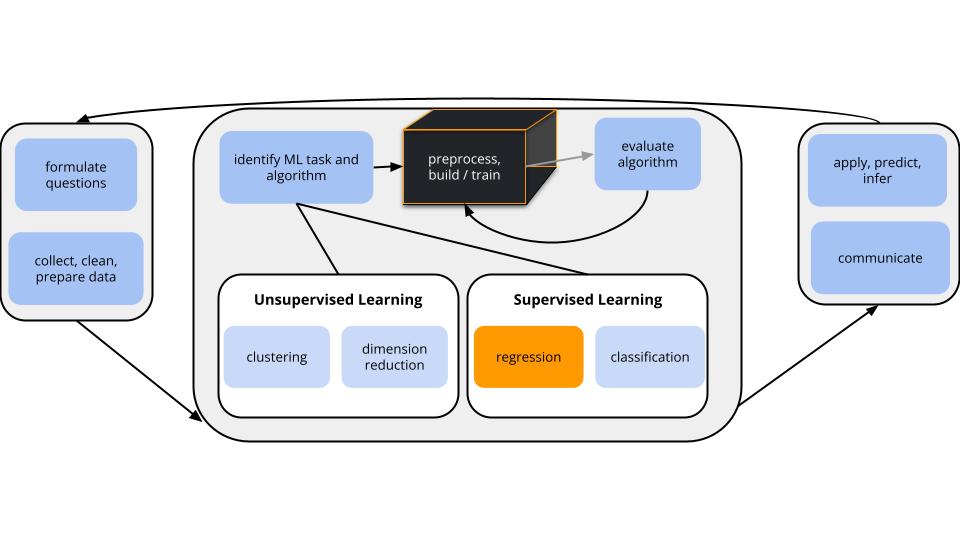

world = supervised learning

We want to model some output variable \(y\) using a set of potential predictors \((x_1, x_2, ..., x_p)\).

task = regression

\(y\) is quantitative.

model = linear regression

We’ll assume that the relationship between \(y\) and (\(x_1, x_2, ..., x_p\)) can be represented by

\[y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + ... + \beta_p x_p + \varepsilon\]
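As a rough illustration of this model, the sketch below simulates data from a known linear relationship and checks that `lm()` recovers coefficients close to the true ones. The variable names, coefficient values, and seed are made up for the example and are not from the course data.

```r
# Simulate data from a known linear model, then estimate the coefficients.
set.seed(1)                       # arbitrary seed, for reproducibility only
n   <- 500
x1  <- rnorm(n)
x2  <- rnorm(n)
eps <- rnorm(n, sd = 0.5)         # the error term (epsilon)
y   <- 2 + 3 * x1 - 1 * x2 + eps  # beta_0 = 2, beta_1 = 3, beta_2 = -1

fit <- lm(y ~ x1 + x2)
round(coef(fit), 2)               # estimates should land near 2, 3, -1
```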

Notes - Model Selection

In model building, the decision of which predictors to use depends upon our goal.

Inferential models

- Goal: Explore & test hypotheses about a specific relationship.

- Predictors: Defined by the goal.

- Example: An economist wants to understand how salaries (\(y\)) vary by age (\(x_1\)) while controlling for education level (\(x_2\)).

Predictive models

- Goal: Produce the “best” possible predictions of \(y\).

- Predictors: Any combination of predictors that help us meet this goal.

- Example: A mapping app wants to provide users with quality estimates of arrival time (\(y\)) utilizing any useful predictors (eg: time of day, distance, route, speed limit, weather, day of week, traffic radar…)

Notes - Model Selection Goals

Model selection algorithms can help us build a predictive model of \(y\) using a set of potential predictors (\(x_1, x_2, ..., x_p\)).

There are 3 general approaches to this task:

- Variable selection (today): Identify a subset of predictors to use in our model of \(y\).

- Shrinkage / regularization (next class): Shrink / regularize the coefficients of all predictors toward or to 0.

- Dimension reduction (later in the semester): Combine the predictors into a smaller set of new predictors.

Small Group Activity - Part 1

Go to https://bcheggeseth.github.io/253_spring_2024/model-selection.html

Open Part 1 Rmd.

Go to Exercises > Part 1.

Your group is going to design a variable selection algorithm to choose which of the predictors to use to predict height of humans (focus is on a predictive model).

- 15 mins: come up with one algorithm, document it, and try it

- 5 mins: try another group’s algorithm

Notes - Variable Selection

Open Part 2 Rmd to take notes.

Let’s consider three existing variable selection algorithms.

Heads up:

- these algorithms are important to building intuition for the questions and challenges in model selection, BUT have major drawbacks.

Notes - Variable Selection

EXAMPLE 1: Best Subset Selection Algorithm

- Build all \(2^p\) possible models that use any combination of the available predictors \((x_1, x_2,..., x_p)\).

- Identify the best model with respect to some chosen metric (eg: CV MAE) and context.

Suppose we used this algorithm for our height model with 12 possible predictors. What’s the main drawback?
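To get a feel for the scale involved, here is a minimal base R sketch that counts the candidate models for 12 predictors and enumerates every subset for a much smaller set. The predictor names `x1`, `x2`, `x3` and the response name `y` are placeholders, not the course variables.

```r
# Each of p predictors is either in or out of the model, giving 2^p models.
p <- 12
n_models <- 2^p
n_models                          # 4096

# Enumerate every subset of a small, hypothetical predictor set.
predictors <- c("x1", "x2", "x3")
subsets <- list(character(0))     # start with the empty (intercept-only) model
for (k in seq_along(predictors)) {
  subsets <- c(subsets, combn(predictors, k, simplify = FALSE))
}
length(subsets)                   # 2^3 = 8

# Turn each subset into a model formula string for a response named y.
formulas <- vapply(subsets, function(s) {
  if (length(s) == 0) "y ~ 1" else paste("y ~", paste(s, collapse = " + "))
}, character(1))
formulas
```

Each formula would still need to be fit and evaluated (eg: with CV), so the work grows with the number of subsets.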

Notes - Variable Selection

EXAMPLE 2: Backward Stepwise Selection Algorithm

- Build a model with all \(p\) possible predictors, \((x_1, x_2,..., x_p)\).

- Repeat the following until only 1 predictor remains in the model:

  - Remove the 1 predictor with the biggest p-value.

  - Build a model with the remaining predictors.

- You now have \(p\) competing models: one with all \(p\) predictors, one with \(p-1\) predictors, …, and one with 1 predictor. Identify the “best” model with respect to some metric (eg: CV MAE) and context.
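The steps above can be sketched in base R as follows. This is an illustration on simulated data, not the code used in the course activity (which uses recipes and workflows). The helper name `backward_sequence`, the data frame `dat`, and its columns are all made up for the example. It only builds the sequence of models; choosing the "best" one would still require a metric such as CV MAE.

```r
# Hypothetical simulated data: y depends on x1 and x2; x3 and x4 are noise.
set.seed(253)
n <- 200
dat <- data.frame(x1 = rnorm(n), x2 = rnorm(n), x3 = rnorm(n), x4 = rnorm(n))
dat$y <- 1 + 2 * dat$x1 - 1.5 * dat$x2 + rnorm(n)

# Returns a list of predictor sets, from all predictors down to 1 predictor.
# Assumes all predictors are numeric, so coefficient names match column names.
backward_sequence <- function(data, response) {
  remaining <- setdiff(names(data), response)
  models <- list()
  while (length(remaining) >= 1) {
    fit <- lm(reformulate(remaining, response = response), data = data)
    models[[length(models) + 1]] <- remaining
    if (length(remaining) == 1) break
    # p-values for the predictors (drop the intercept row)
    pvals <- summary(fit)$coefficients[-1, "Pr(>|t|)"]
    # Remove the predictor with the biggest p-value, then refit
    remaining <- setdiff(remaining, names(which.max(pvals)))
  }
  models
}

seq_models <- backward_sequence(dat, "y")
for (m in seq_models) {
  cat(length(m), "predictors:", paste(m, collapse = ", "), "\n")
}
```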

Notes - Variable Selection

EXAMPLE 3: Backward Stepwise Selection Step-by-Step Results

Below is the complete model sequence along with 10-fold CV MAE for each model (using set.seed(253)).

| number of predictors | CV MAE | predictor list |
|---|---|---|
| 12 | 5.728 | weight, hip, forearm, thigh, chest, abdomen, age, ankle, wrist, knee, neck, biceps |
| 11 | 5.523 | weight, hip, forearm, thigh, chest, abdomen, age, ankle, wrist, knee, neck |
| 10 | 5.413 | weight, hip, forearm, thigh, chest, abdomen, age, ankle, wrist, knee |
| 9 | 5.368 | weight, hip, forearm, thigh, chest, abdomen, age, ankle, wrist |
| 8 | 5.047 | weight, hip, forearm, thigh, chest, abdomen, age, ankle |
| 7 | 5.013 | weight, hip, forearm, thigh, chest, abdomen, age |
| 6 | 4.684 | weight, hip, forearm, thigh, chest, abdomen |
| 5 | 4.460 | weight, hip, forearm, thigh, chest |
| 4 | 4.385 | weight, hip, forearm, thigh |
| 3 | 4.091 | weight, hip, forearm |
| 2 | 3.732 | weight, hip |
| 1 | 3.658 | weight |

- REVIEW: Interpret the CV MAE for the model of `height` by `weight` alone.

- Is this algorithm more or less computationally expensive than the best subset algorithm?

- The predictors `wrist` and `neck`, in that order, are the most strongly correlated with `height`. Where do these appear in the backward sequence and what does this mean?

- We deleted predictors one at a time. Why is this better than deleting a collection of multiple predictors at the same time (eg: kicking out all predictors with p-value > 0.1)?
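As a reminder of what goes into a CV MAE value like those in the table above, here is a minimal base R sketch of k-fold cross-validated MAE for a linear model. The function name `cv_mae`, the simulated data frame `sim`, and the seeds are assumptions for illustration. The course activity computes this with different tooling, so numbers from this sketch would not match the table.

```r
# k-fold CV MAE for a linear model, written with base R only.
# Note: calling set.seed() inside the function resets the global RNG state.
cv_mae <- function(formula, data, k = 10, seed = 253) {
  set.seed(seed)
  # Randomly assign each row to one of k folds of (nearly) equal size.
  fold <- sample(rep(seq_len(k), length.out = nrow(data)))
  response <- all.vars(formula)[1]
  fold_mae <- vapply(seq_len(k), function(j) {
    train <- data[fold != j, , drop = FALSE]
    test  <- data[fold == j, , drop = FALSE]
    fit   <- lm(formula, data = train)
    # Mean absolute prediction error on the held-out fold
    mean(abs(test[[response]] - predict(fit, newdata = test)))
  }, numeric(1))
  mean(fold_mae)                  # average the k fold-level MAEs
}

# Hypothetical simulated data: y depends on x1 only.
set.seed(1)
n <- 200
sim <- data.frame(x1 = rnorm(n), x2 = rnorm(n))
sim$y <- 1 + 2 * sim$x1 + rnorm(n)

cv_mae(y ~ x1, sim)               # model with the useful predictor
cv_mae(y ~ x1 + x2, sim)          # adds a noise predictor
cv_mae(y ~ x2, sim)               # noise predictor only
```

Because the same seed produces the same fold assignment, the competing models are compared on identical folds.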

In-Class Activity - Part 2

Go back to https://bcheggeseth.github.io/253_spring_2024/model-selection.html

Go to Exercises > Part 2

- Become familiar with the new code structures (recipes and workflows)

- Ask me questions as I move around the room.

After Class

Finishing the activity

- If you didn’t finish the activity, no problem! Be sure to complete the activity outside of class, review the solutions on the course website, and ask any questions on Slack or in office hours.

Continue to check in on Slack. I’ll be posting announcements there from now on.

Upcoming due dates

Today by 11:59pm: Homework 2

Tuesday, 10 minutes before your section: Checkpoint 5.

Tuesday 2/13: Homework 3

- Next Tuesday we’ll cover more of the concepts covered on this HW.

- Using Slack, invite others to work on homework with you.

- Pass/fail, limited revisions.

- The deadline is so that we can get timely feedback to you. Let me know if you cannot make the deadline, and what day you’ll finish.
