Topic 12 Data Import

Learning Goals

- Develop comfort in finding an existing data set to import into R

- Develop comfort in importing data of a variety of file types into R

- Understand and implement the data cleaning process to make values consistent

- Understand the implications of different ways of dealing with missing values with

replace_naanddrop_na

Create a new Rmd file (save it as 12-Data Import.Rmd). Put this file in a folder Assignment_08 in your COMP_STAT_112 folder.

- Make sure to add alt text to visualizations using fig.alt!

Finding, Importing, and Cleaning Data

Additional resources and readings:

1. Data Import Cheat Sheet

2. readr documentation

3. Data import from Wickham and Grolemund

4. Missing data from Wickham and Grolemund

5. Data intake from Baumer, Kaplan, and Horton

6. Using the import wizard from Prof. Lendway

In practice, data science is not as glamorous as building fancy classifiers and creating visualizations all the time. Data scientists spend 80% of their time acquiring and cleaning data. While the skill of data acquisition is best learned through experience, this section of the course will outline the most common approaches to acquiring data.

When importing and cleaning a dataset, take careful notes in your R Markdown. Explain where you found the dataset (the source). Record the steps you took to clean and import the data in case somebody else needs to replicate your analysis. You should also make sure to cite and credit the creator of the dataset.

Finding Existing Data Sets

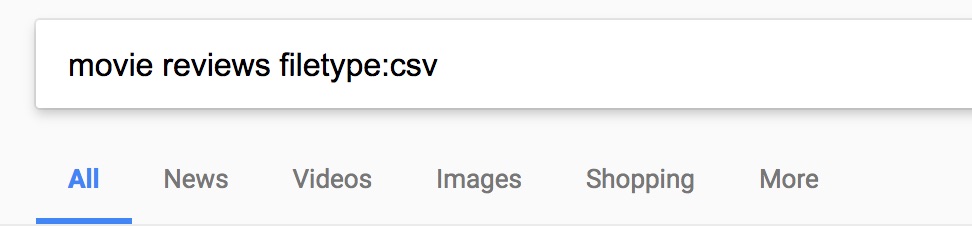

Figure 12.1: An example Google search.

The easiest way to get data is by finding an existing dataset that has been created by somebody else. Search engines such as Google can be excellent tools, especially when using file type filters. For example, if you are looking for a dataset about movie reviews, you might search for “movie reviews filetype:csv”. You could also try searching for other common filetypes that are compatible with R, such as .tsv, .xls, .xlsx, or .rds.

Another good resource for datasets are compendiums of datasets such as the excellent and continuously-evolving awesome-public-datasets GitHub repo, Kaggle datasets or the data.world website website. You can find links to other similar compendiums at the end of the awesome-public-datasets page.

Saving Datasets Locally

Once you’ve found a dataset you are interested in, you need to download the file and save it to a location on your computer.

The best location to put a dataset is within a folder that is dedicated to the project or assignment. For example, you’ve created a folder for Assignment_08 and any datasets you use for this assignment should be saved in that folder.

Quick Note: One key idea when you are working with datasets on your local machine is that you need to:

- know where the files are located

- know how to tell the computer program where the files are located (a file path)

There are two common ways we refer to file locations: absolute file path and relative file path.

Absolute file path

- An absolute file path describes the location of a file from the root directory or folder, typically the user directory.

- On a Mac,

~refers to the user root directory. - On a Windows, the root directory is typically

C:\

- On a Mac,

- Example: A file called

data.csvis located in theAssignment_08folder inComp_Stat_112folder on the Desktop- On a Mac, the absolute file path is

~/Desktop/Comp_Stat_112/Assignment_08/data.csv - On a Windows, the absolute file path is

C:/Desktop/Comp_Stat_112/Assignment_08/data.csv - Windows Note: Switch from backslash to forward slashes in R or you need to use

\\instead of\

- On a Mac, the absolute file path is

Relative file path

- A relative file path describes the location of a file from the current working directory (or in the case of an Rmd, the location of the Rmd file).

- When working within an Rmd file, it will first look for files relative to the location of the Rmd file. Therefore, it is good practice to put the data file in the same folder as the Rmd file you are working on.

- If you are working in the Console, you can change the working directory (Session > Set Working Directory).

- Example: A file called

data.csvis located in adatafolder withinComp_Stat_112folder on the Desktop- If the working directory is

~/Desktop/Comp_Stat_112/Assignment_08/, the relative path is../data/data.csv. The..refers to the parent directory (go up one level to the folder containingAssignment_08). - If the working directory is

~/Desktop/Comp_Stat_112/, the relative path isdata/data.csv. - If the working directory is

~/Desktop/Comp_Stat_112/data, the relative path isdata.csv.

- If the working directory is

Loading Datasets

Once you have a dataset, it’s time to load it into R. Don’t be frustrated if this step takes some time.

The table below lists some common data import functions and when you would use them.

| Function | Use when |

|---|---|

read_csv() |

data are saved in .csv (comma delimited or comma separated values) format - you can save Excel files and Google Sheets in this format |

read_delim() |

data are saved in other delimited formats (tab, space, etc.) |

read_sheet() |

data are in a Google Sheet |

st_read() |

reading in a shapefile |

A few tips:

- When reading in data from a file, one tip is to initially use the Import Wizard to help write the code and file path. DO NOT use it to import the data as you will need the code to read in the data in order to knit your document. Check out a video tutorial on the Import Wizard

- The import functions

read_csv,read_csv2, andread_tsvfrom thereadrpackage are faster than their counterpartsread.csv,read.csv2, andread.tsvfrom thebasepackage for large files. They also have more flexible parsers (e.g., for dates, times, percentages). We recommend you use these functions instead of thebasefunctions likeread.csv. The packagefreadhas other import functions and is also faster for large datasets. For smaller data sets (say 1MB or less), there won’t be that much difference in time for the three different packages. read_csv2is for semi-colon delimited files, whereasread_csvis for comma delimited files.- The

readrfunctions automatically guess the type of data in each column (e.g., character, double, integer). You will often see a message just after the import telling you what it chose for each column. If you think there is an issue, you can use the functionproblems()to detect problems, and/or specify how the columns should be imported. See the section on “column specification” in the Data Import Cheat Sheet for more info. - If you have trouble importing a dataset, try to first import it into a different data such as Google Sheets or Excel tool and then export it as a TSV or CSV before reading it into

R. - For really messy data, OpenRefine is complicated but powerful (YouTube demo).

- When you are importing a large file, you might want to first try importing a subset of the data. For example, if you want to take the first 17 rows only, you can write

read_csv("file.csv",n_max=17) - Similarly, you might want to skip the first \(n\) lines of the file when importing, select only certain columns to read in, or choose a random subset of the rows. See the cheat sheet for instructions on these tasks or just google!

Checking the Imported Datasets

After reading in new data, it is ALWAYS a good idea to do some quick checks of the data. Here are two first steps that are especially useful:

Open the data in the spreadsheet-like viewer with

View(dataset_name)and take a look at it. Sort it by different variables by clicking on the arrows next to the variable name. Make sure there isn’t anything unexpected.Do a quick summary of the data. The code below is one way to do this. For quantitative variables, it provides summary statistics and will let you know if there are missing values. For factors (they need to be factors, not just character variables - the

mutate()changes them to factors), it shows you counts for the top categories and tells you if there are any missing values.

dataset_name %>%

mutate(across(where(is.character), as.factor)) %>%

summary()Cleaning Datasets

Cleaning Categorical Variables

First we want to make sure the factors are “clean”, meaning consistent values in the correct format. For example, true and TRUE and T will be three different factors. The easiest way to manage this is to look at the levels for the factor and replace values with a messy factor to a clean one. For example, the following code cleans up values in true/false values in column X in a data set called df:

df <- df %>% mutate(X = fct_recode(X, "TRUE" = "T", "TRUE" = "true", "FALSE" = "f", "FALSE" = "N", "FALSE" = "No"))Example 12.1 (Clean up the levels on the Messy IMDB 5000 dataset) We will use a slightly “messied” version of the IMDB 5000 Dataset, collected by chuansun76 on Kaggle.12

- Download the csv file of the IMDB 5000 dataset from “https://bcheggeseth.github.io/112_fall_2023/data/imdb_5000_messy.csv” (right-click, save file as), put it in your

Assignment_08folder, useread_csvto load it into RStudio, and save it asimdbMessyin R.

Solution

b. Print out the variable names.

Solution

## [1] "...1" "color" "director_name" "num_critic_for_reviews"

## [5] "duration" "director_facebook_likes" "actor_3_facebook_likes" "actor_2_name"

## [9] "actor_1_facebook_likes" "gross" "genres" "actor_1_name"

## [13] "movie_title" "num_voted_users" "cast_total_facebook_likes" "actor_3_name"

## [17] "facenumber_in_poster" "plot_keywords" "movie_imdb_link" "num_user_for_reviews"

## [21] "language" "country" "content_rating" "budget"

## [25] "title_year" "actor_2_facebook_likes" "imdb_score" "aspect_ratio"

## [29] "movie_facebook_likes"## [1] "actor_1_facebook_likes" "actor_1_name" "actor_2_facebook_likes" "actor_2_name"

## [5] "actor_3_facebook_likes" "actor_3_name" "aspect_ratio" "budget"

## [9] "cast_total_facebook_likes" "color" "content_rating" "country"

## [13] "director_facebook_likes" "director_name" "duration" "facenumber_in_poster"

## [17] "genres" "gross" "imdb_score" "language"

## [21] "movie_facebook_likes" "movie_imdb_link" "movie_title" "num_critic_for_reviews"

## [25] "num_user_for_reviews" "num_voted_users" "plot_keywords" "title_year"c. Examine the color variable. What are the existing values?

Solution

## # A tibble: 6 × 1

## color

## <chr>

## 1 Color

## 2 Color

## 3 Color

## 4 Color

## 5 <NA>

## 6 Color## [1] "B&W" "Black and White" "color" "Color" "COLOR"## [1] "Color" NA "Black and White" "COLOR" "color" "B&W"- How often does each color occur? Hint:

tableorcount(which is a short hand for agroup_by/summarize(n=n()))

Solution

## # A tibble: 6 × 2

## color n

## <chr> <int>

## 1 B&W 10

## 2 Black and White 199

## 3 COLOR 30

## 4 Color 4755

## 5 color 30

## 6 <NA> 19##

## B&W Black and White color Color COLOR

## 10 199 30 4755 30- The

read_csvread in thecolorvalues as strings. For this exercise, let’s convert them to factor using the code:imdbMessy <- imdbMessy %>% mutate(color = factor(color)).

- Select what you think is the best value for each level and replace “messy” versions of the value with clean ones with the

fct_recodefunction as shown above. How many entries are there for each level now?

Solution

imdbMessy <- imdbMessy %>% mutate(color = fct_recode(color, "B&W" = "Black and White", "Color" = "color", "Color" = "COLOR"))

imdbMessy %>% count(color)## # A tibble: 3 × 2

## color n

## <fct> <int>

## 1 B&W 209

## 2 Color 4815

## 3 <NA> 19Addressing Missing Data

Finally, you should look for and address missing data, encoded as NA (not available) in R. There is no single formula for dealing with NAs. You should first look to see how many NAs appear in each column:

Study the individual observations with NAs carefully. Why do you think they are missing? Are certain types of observations more likely to have NAs?

You have several options for dealing with NAs (and they have different consequences):

- You can remove observations with one or more NAs (see

drop_na). - You can remove columns with many NA values.

- You can replace NAs with a reasonable value (called imputing values). This could be a default value (like zero), or the average for a column. (see

replace_na) - You can use packages such as

missForestthat fill in missing values with statistical predictions.13

There is no perfect approach to dealing with NAs, and you must think carefully about how removing or replacing missing data may affect your work.

Example 12.2 (Address NA values in the Messy IMDB 5000 dataset) Consider imdbMessy.

- Print out the number of NAs in each of the columns.

Solution

## ...1 color director_name num_critic_for_reviews

## 0 19 104 50

## duration director_facebook_likes actor_3_facebook_likes actor_2_name

## 15 104 23 13

## actor_1_facebook_likes gross genres actor_1_name

## 7 884 0 7

## movie_title num_voted_users cast_total_facebook_likes actor_3_name

## 0 0 0 23

## facenumber_in_poster plot_keywords movie_imdb_link num_user_for_reviews

## 13 153 0 21

## language country content_rating budget

## 12 5 303 492

## title_year actor_2_facebook_likes imdb_score aspect_ratio

## 108 13 0 329

## movie_facebook_likes

## 0- Consider the

actor_1_facebook_likescolumn. Take a look at a few of the records that have NA values. Why do you think there are NAs?

Solution

This variable is missing if actor_1_name is missing, which suggests that this movie doesn’t have an actor 1 listed.

## # A tibble: 6 × 29

## ...1 color director_name num_critic_for_reviews duration director_facebook_likes actor_3_facebook_likes

## <dbl> <fct> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 4503 Color Léa Pool 23 97 4 NA

## 2 4520 Color Harry Gantz 12 105 0 NA

## 3 4721 Color U. Roberto Romano 3 80 6 NA

## 4 4838 Color Pan Nalin 15 102 95 NA

## 5 4946 Color Amal Al-Agroobi NA 62 58 NA

## 6 4947 Color Andrew Berends 12 90 0 NA

## # ℹ 22 more variables: actor_2_name <chr>, actor_1_facebook_likes <dbl>, gross <dbl>, genres <chr>,

## # actor_1_name <chr>, movie_title <chr>, num_voted_users <dbl>, cast_total_facebook_likes <dbl>,

## # actor_3_name <chr>, facenumber_in_poster <dbl>, plot_keywords <chr>, movie_imdb_link <chr>,

## # num_user_for_reviews <dbl>, language <chr>, country <chr>, content_rating <chr>, budget <dbl>, title_year <dbl>,

## # actor_2_facebook_likes <dbl>, imdb_score <dbl>, aspect_ratio <dbl>, movie_facebook_likes <dbl>- Create a new dataframe that removes observations that have NAs for

actor_1_facebook_likes.

Solution

- Create a second new data frame that replaces NAs in

actor_1_facebook_likeswith 0.

Additional Practice

Exercise 12.1 Find a dataset that is not built into R and is related to one of the following topics:

- A personal hobby or passion

- Your hometown, or a place you have lived

Load the data into R, make sure it is clean, and construct one interesting visualization of the data and include alt text.