Cross-Validation

As we gather

- Sit in the randomly assigned groups from Thursday.

- Check in with each other as humans.

Announcements

- Thursday at 11:15am - MSCS Coffee Break

- Smail Gallery

- You should have been notified of a “Stat 253 Feedback” Google Sheet shared with you

- Let me know if you didn’t. This is where your individual feedback will be.

- I’ll send regular reminders to check this sheet.

- Prepare to take notes.

- Locate the Rmd for today’s activity in the Schedule of the course website (see bottom of slides for url).

- Save this Rmd in the “STAT 253 > Notes” folder.

Notes - Cross-Validation

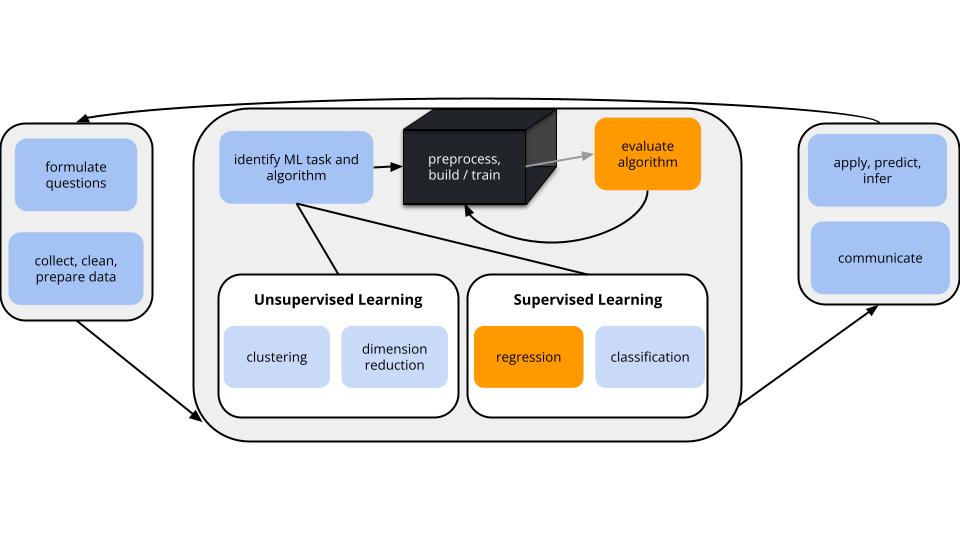

CONTEXT

world = supervised learning

We want to build a model of some output variable \(y\) by some predictors \(x\).

task = regression

\(y\) is quantitative.

model = linear regression model via least squares algorithm

We’ll assume that the relationship between \(y\) and \(x\) can be represented by

\[y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_p x_p + \varepsilon\]
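To make the model assumption concrete, here is a minimal base R sketch. The data are simulated, and the coefficient values, sample size, and the name sim_data are made up for illustration. lm() fits the model by least squares.

```r
# Hypothetical simulated data: coefficients and noise level are made up
set.seed(253)
n  <- 200
x1 <- rnorm(n)
x2 <- rnorm(n)
# True model: beta_0 = 1, beta_1 = 2, beta_2 = -0.5, plus random error
y  <- 1 + 2 * x1 - 0.5 * x2 + rnorm(n, sd = 1)
sim_data <- data.frame(y, x1, x2)

# Least squares estimates of beta_0, beta_1, beta_2
fit <- lm(y ~ x1 + x2, data = sim_data)
coef(fit)
```

The estimated coefficients should land near the values used to simulate the data, though not exactly on them because of the random error term.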

Notes - Cross-Validation

GOAL: model evaluation

We want more honest metrics of prediction quality that

- assess how well our model predicts new outcomes; and

- help prevent overfitting.
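As a rough illustration of why in-sample metrics can be too optimistic, the base R sketch below compares a simple and a deliberately overcomplicated model on data used for fitting versus data held back. Everything here is hypothetical: the simulated data, the 20/20 split, and the degree-10 polynomial are assumptions chosen only to show the idea.

```r
# Hypothetical simulated data with a truly linear relationship
set.seed(253)
n <- 40
x <- runif(n, 0, 10)
y <- 3 + 0.5 * x + rnorm(n, sd = 2)
train <- data.frame(x = x[1:20],  y = y[1:20])
test  <- data.frame(x = x[21:40], y = y[21:40])

mean_abs_error <- function(actual, predicted) mean(abs(actual - predicted))

simple_fit  <- lm(y ~ x, data = train)            # matches the true structure
complex_fit <- lm(y ~ poly(x, 10), data = train)  # far more flexible than needed

# MAE on the data used to fit the models
in_sample <- c(
  simple  = mean_abs_error(train$y, fitted(simple_fit)),
  complex = mean_abs_error(train$y, fitted(complex_fit))
)

# MAE on data the models have not seen
new_data <- c(
  simple  = mean_abs_error(test$y, predict(simple_fit, newdata = test)),
  complex = mean_abs_error(test$y, predict(complex_fit, newdata = test))
)

rbind(in_sample, new_data)
```

An overfit model typically looks better in-sample than it does on new data, which is the gap that more honest metrics are meant to expose.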

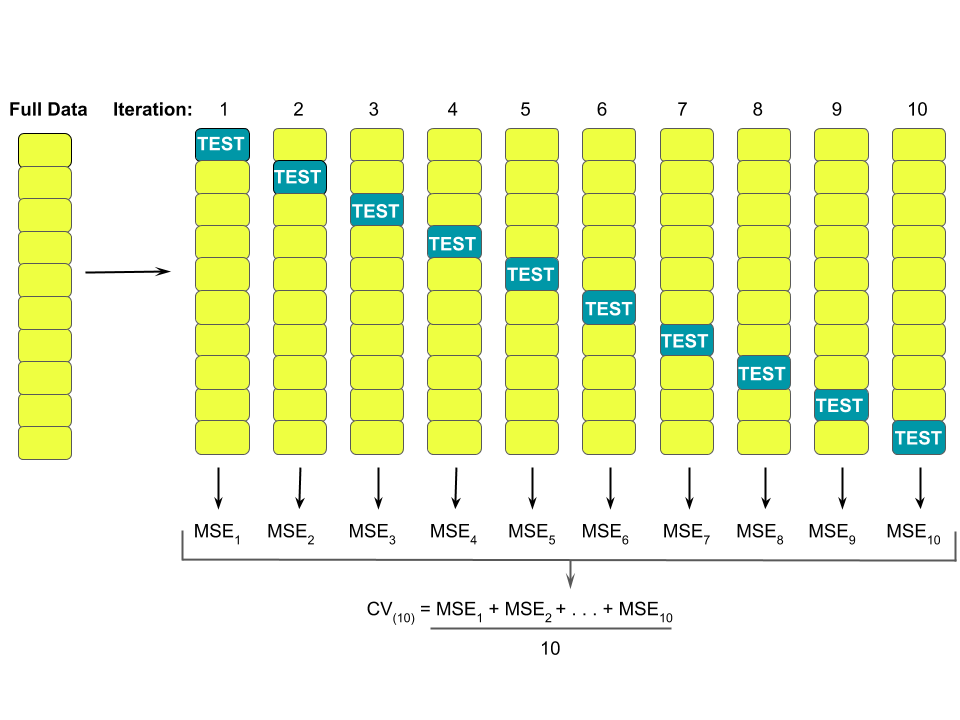

Notes - k-fold Cross-Validation

We can use k-fold cross-validation to estimate the typical error in our model predictions for new data:

- Divide the data into \(k\) folds (or groups) of approximately equal size.

- Repeat the following procedures for each fold \(j = 1,2,...,k\):

- Remove fold \(j\) from the data set.

- Fit a model using the data in the other \(k-1\) folds (training).

- Use this model to predict the responses for the \(n_j\) cases in fold \(j\): \(\hat{y}_1, ..., \hat{y}_{n_j}\).

- Calculate the MAE for fold \(j\) (testing): \(\text{MAE}_j = \frac{1}{n_j}\sum_{i=1}^{n_j} |y_i - \hat{y}_i|\).

- Combine this information into one measure of model quality: \[\text{CV}_{(k)} = \frac{1}{k} \sum_{j=1}^k \text{MAE}_j\]
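The steps above can be sketched by hand in base R. This is only an illustration under stated assumptions: the data are simulated, k = 10 is an arbitrary choice, and names such as fold_id and mae_by_fold are made up for the sketch.

```r
# Hypothetical simulated data; any data frame with a quantitative y would do
set.seed(253)
n <- 100
sample_data <- data.frame(x1 = rnorm(n), x2 = rnorm(n))
sample_data$y <- 1 + 2 * sample_data$x1 - 0.5 * sample_data$x2 + rnorm(n)

k <- 10  # number of folds (assumed for illustration)

# Step 1: randomly assign each case to one of k folds of roughly equal size
fold_id <- sample(rep(1:k, length.out = n))

# Step 2: for each fold j, train on the other k - 1 folds and test on fold j
mae_by_fold <- numeric(k)
for (j in 1:k) {
  in_fold_j <- fold_id == j
  train_j <- sample_data[!in_fold_j, ]   # remove fold j
  test_j  <- sample_data[in_fold_j, ]
  fit_j   <- lm(y ~ x1 + x2, data = train_j)
  y_hat   <- predict(fit_j, newdata = test_j)
  mae_by_fold[j] <- mean(abs(test_j$y - y_hat))   # MAE_j
}

# Step 3: average the k fold-level MAEs to get CV_(k)
cv_mae <- mean(mae_by_fold)
cv_mae
```

Each case is used for testing exactly once and for training k - 1 times, so every prediction that feeds into cv_mae is made for a case the fitted model did not see.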

Definitions

algorithm = a step-by-step procedure for solving a problem (Merriam-Webster)

tuning parameter = a parameter or quantity upon which an algorithm depends, that must be selected or tuned to “optimize” the algorithm

Group Discussion

Go to https://bcheggeseth.github.io/253_spring_2024/cross-validation.html

Then go to the section “Small Group Discussion: Algorithms and Tuning”.

- Algorithms

Why is \(k\)-fold cross-validation an algorithm?

What is the tuning parameter of this algorithm and what values can this take?

- Tuning the k-fold Cross-Validation algorithm

Let’s explore k-fold cross-validation with some personal experience. Our class has a representative sample of cards from a non-traditional population (no “face cards”, unequal numbers of each card, etc.). We want to use these to predict whether a new card will be odd or even (a classification task).

- Based on all of our cards, do we predict the next card will be odd or even?

- You’ve been split into 2 groups. Use 2-fold cross-validation to estimate the possible error of using our sample of cards to predict whether a new card will be odd or even. How’s this different than validation?

- Repeat for 3-fold cross-validation. Why might this be better than 2-fold cross-validation?

- Repeat for LOOCV, i.e. n-fold cross-validation where n is the number of students in this room. Why might this be worse than 3-fold cross-validation?

- What value of k do you think practitioners typically use?
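For anyone who wants to replay the card exercise on a computer afterwards, here is one possible base R sketch. The card values are invented stand-ins for the class's actual cards, the prediction rule (guess the more common parity among the training cards) is an assumption about how the exercise is run, and the helper names majority and cv_error are hypothetical.

```r
# Hypothetical cards: made-up values standing in for the class's sample
set.seed(253)
cards  <- sample(c(2, 3, 4, 5, 5, 7, 8, 9, 9, 10), size = 24, replace = TRUE)
parity <- ifelse(cards %% 2 == 0, "even", "odd")

# Prediction rule (assumed): guess the more common parity in the training cards
majority <- function(labels) names(which.max(table(labels)))

# k-fold CV estimate of the misclassification rate
cv_error <- function(labels, k) {
  n <- length(labels)
  fold_id <- sample(rep(1:k, length.out = n))
  fold_error <- sapply(1:k, function(j) {
    guess <- majority(labels[fold_id != j])   # train on the other folds
    mean(labels[fold_id == j] != guess)       # test on fold j
  })
  mean(fold_error)
}

errors <- c(
  "2-fold" = cv_error(parity, 2),
  "3-fold" = cv_error(parity, 3),
  "LOOCV"  = cv_error(parity, length(parity))
)
errors
```

Changing the seed and rerunning gives a feel for how much the 2-fold and 3-fold estimates move around depending on which cards land in which fold.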

- R Code Preview

We’ve been doing a 2-step process to build linear regression models using the tidymodels package:

For k-fold cross-validation, we can tweak STEP 2.

- Discuss the code below and why we need to set the seed.
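As a starting point for the seed question (separate from the tidymodels code in the notes), the base R sketch below shows that fold assignment is a random step, so fixing the seed makes it reproducible. The seed value 253 and the tiny sizes n = 12 and k = 3 are arbitrary assumptions.

```r
n <- 12  # number of cases (assumed)
k <- 3   # number of folds (assumed)

# Same seed, same random fold assignment
set.seed(253)
folds_a <- sample(rep(1:k, length.out = n))

set.seed(253)
folds_b <- sample(rep(1:k, length.out = n))

identical(folds_a, folds_b)

# Without resetting the seed, the next assignment will generally differ
folds_c <- sample(rep(1:k, length.out = n))
rbind(folds_a, folds_b, folds_c)
```

Different fold assignments lead to slightly different cross-validated metrics, so setting the seed lets collaborators reproduce the same numbers.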

Notes - R code

Suppose we wish to build and evaluate a linear regression model of y vs x1 and x2 using our sample_data.

Obtain k-fold cross-validated estimates of MAE and \(R^2\)

```r
# model specification
lm_spec <- linear_reg() %>%
  set_mode("regression") %>%
  set_engine("lm")

# k-fold cross-validation
# For "v", put your number of folds k
set.seed(___)
model_cv <- lm_spec %>%
  fit_resamples(
    y ~ x1 + x2,
    resamples = vfold_cv(sample_data, v = ___),
    metrics = metric_set(mae, rsq)
  )
```

Notes - R code

Obtain the cross-validated metrics

Details: get the MAE and R-squared for each test fold
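One way to carry out both steps with tidymodels is sketched below. It assumes the tidymodels package is installed, uses simulated data in place of sample_data, and fills in a seed of 253 and v = 10 folds purely for illustration. collect_metrics() summarizes across folds, and unnest() on the .metrics column shows the fold-level values.

```r
library(tidymodels)

# Hypothetical simulated data standing in for sample_data
set.seed(253)
sample_data <- tibble(x1 = rnorm(100), x2 = rnorm(100)) %>%
  mutate(y = 1 + 2 * x1 - 0.5 * x2 + rnorm(100))

# model specification
lm_spec <- linear_reg() %>%
  set_mode("regression") %>%
  set_engine("lm")

# 10-fold cross-validation (seed and v are assumed values)
set.seed(253)
model_cv <- lm_spec %>%
  fit_resamples(
    y ~ x1 + x2,
    resamples = vfold_cv(sample_data, v = 10),
    metrics = metric_set(mae, rsq)
  )

# Cross-validated metrics: averaged across the 10 test folds
cv_summary <- model_cv %>% collect_metrics()
cv_summary

# Details: MAE and R-squared for each test fold
per_fold <- model_cv %>% unnest(.metrics)
per_fold
```

The summary has one row per metric, while the fold-level table has one row per fold and metric, which makes it easy to see how much the error varies from fold to fold.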

In-Class Activity

Go back to https://bcheggeseth.github.io/253_spring_2024/schedule.html

Open Rmd file.

- Work through the exercises implementing CV with the humans data.

- Same directions as before

- Be kind to yourself

- Be kind to each other & collaborate

- Ask me questions as I move around the room.

After Class

Finishing the activity

- This is the end of Unit 1, so there are reflection questions at the bottom to help you organize the concepts in your mind. Use the concept map linked in Q9!

- If you didn’t finish the activity, no problem! Be sure to complete the activity outside of class, review the solutions in the course site, and ask any questions on Slack or in office hours.

- Re-organize and review your notes to help deepen your understanding, solidify your learning, and make homework go more smoothly!

An R code video, posted on today’s section on Moodle, talks through the new code. This video is OPTIONAL. Decide what’s right for you.

Continue to check in on Slack. I’ll be posting announcements there from now on.

Upcoming due dates

- Thursday, 10 minutes before your section: Checkpoint 4 on some new R code.

- Thursday 2/1: Homework 2

- Start today if you haven’t already

- Using Slack, invite others to work on homework with you.

- Pass/fail, limited revisions

- The deadline exists so we can get timely feedback to you. Let me know if you cannot make it, and tell me what day you’ll finish.
