5.2 Autocorrelation Function

As we discussed in the last chapter, the key feature of correlated data is the covariance and correlation among observations. For time series, we have decomposed the data into trend, seasonality, and error. It is the dependence in the errors that we will now work to model.

Remember: for a stationary random process \(X_t\) (constant mean, constant variance), the autocovariance function depends only on the difference in time, which we will refer to as the lag \(k\), so

\[\Sigma(k) = Cov(X_t, X_{t+k}) = E[(X_t - \mu_t)(X_{t+k} - \mu_{t+k})] \] for any time \(t\).

Most time series are not stationary in that they do not have a constant mean across time. By removing the trend and seasonality, we attempt to get errors (residuals) that have a constant mean around 0. We’ll discuss the constant variance bit in a second.

If we assume that the residuals are a stationary process, we can estimate the autocovariance function with the sample autocovariance function (ACVF),

\[c_k = \frac{1}{n}\sum^{n-|k|}_{t=1}(x_t - \bar{x})(x_{t+|k|} - \bar{x})\] where \(\bar{x}\) is the sample mean, averaged across time because we are assuming it is constant.
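To make the formula concrete, here is a minimal sketch that computes \(c_k\) directly and compares it with `stats::acf(type = "covariance")`. The helper `sample_acvf()` and the simulated series `x` are hypothetical, introduced only for illustration. The two should agree because `acf()` also demeans the series once and divides by \(n\) (not \(n-k\)).

```r
# Sample autocovariance c_k with divisor n, following the formula above
# (sample_acvf is a hypothetical helper, not part of any package)
sample_acvf <- function(x, k) {
  n <- length(x)
  k <- abs(k)                  # c_k depends only on |k|
  xbar <- mean(x)              # one mean for the whole series (assumed constant)
  sum((x[1:(n - k)] - xbar) * (x[(1 + k):n] - xbar)) / n
}

set.seed(1)
x <- rnorm(100)                # hypothetical example series

manual  <- sapply(0:10, function(k) sample_acvf(x, k))
builtin <- acf(x, lag.max = 10, type = "covariance", plot = FALSE)$acf[, 1, 1]

all.equal(manual, builtin)     # TRUE: stats::acf() also uses divisor n
```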

For any sequence of observations, \(x_1,...,x_n\),

- \(c_k = c_{-k}\)

- \(c_0\geq 0\) and \(|c_{k}| \leq c_{0}\)
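These two properties can be checked numerically. The sketch below uses a hypothetical random walk (deliberately non-stationary, since the properties hold for any sequence). It computes \(c_{-k}\) by pairing \(x_t\) with \(x_{t-k}\), which is the same set of products reindexed. The bound \(|c_k| \leq c_0\) follows from the Cauchy-Schwarz inequality because both sums share the divisor \(n\).

```r
set.seed(2)
x <- cumsum(rnorm(200))   # hypothetical random walk: the properties hold for any sequence
n <- length(x)
xbar <- mean(x)

# c_k pairs x_t with x_{t+k}, t = 1, ..., n - k
c_pos <- function(k) sum((x[1:(n - k)] - xbar) * (x[(1 + k):n] - xbar)) / n
# c_{-k} pairs x_t with x_{t-k}, t = k + 1, ..., n
c_neg <- function(k) sum((x[(k + 1):n] - xbar) * (x[1:(n - k)] - xbar)) / n

lags <- 1:50
c0 <- c_pos(0)

all.equal(sapply(lags, c_pos), sapply(lags, c_neg))  # TRUE: c_k = c_{-k}
c0 >= 0                                              # TRUE
all(abs(sapply(lags, c_pos)) <= c0)                  # TRUE: |c_k| <= c_0
```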

The sample autocorrelation function (ACF) is the sample autocovariance divided by the sample variance, which is the autocovariance at lag 0:

\[r_k = \frac{c_k}{c_0}\]

For a non-stationary series with a trend and seasonality, we can expect the sample autocorrelation to stay fairly high even at large lags, decaying only slowly. This pattern typically indicates that you still need to deal with the trend and seasonality.
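As a rough sketch of both points, the code below builds \(r_k = c_k/c_0\) from the sample autocovariances and checks it against the default (correlation) output of `acf()`. It then compares the lag-24 autocorrelation of a hypothetical series with a linear trend against plain white noise. The slope and series length are arbitrary assumptions chosen for illustration.

```r
set.seed(3)
tt <- 1:240
trend_series <- 0.05 * tt + rnorm(240)  # hypothetical: linear trend plus noise
noise_only   <- rnorm(240)              # hypothetical: white noise, no trend

# r_k = c_k / c_0, built from the sample autocovariances
c_k <- acf(trend_series, lag.max = 24, type = "covariance", plot = FALSE)$acf[, 1, 1]
r_k <- c_k / c_k[1]                     # c_k[1] holds c_0 because R indexes from 1
r_builtin <- acf(trend_series, lag.max = 24, plot = FALSE)$acf[, 1, 1]
all.equal(r_k, r_builtin)               # TRUE

# Autocorrelation at lag 24
r_k[25]                                                    # stays large because of the trend
acf(noise_only, lag.max = 24, plot = FALSE)$acf[25, 1, 1]  # close to zero
```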

```r
acf(birth)  # sample ACF of the raw (non-stationary) birth series
```

We would like to see the autocorrelation of the random errors, after removing the trend and the seasonality. Let's look at the sample autocorrelation of the residuals from the model with a moving average filter estimated trend and monthly averages to account for seasonality.

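```r
library(dplyr)  # provides the %>% pipe

birthTS %>%
  dplyr::select(ResidualTS) %>%         # residuals after removing trend and seasonality
  dplyr::filter(complete.cases(.)) %>%  # drop NAs left by the moving average filter
  acf()
```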

The autocorrelation has to be 1 at lag 0 because \(r_0 = c_0/c_0 = 1\). Note that the lags here are in months (one lag per observation) because the residuals are not stored as a ts() object. The autocorrelation decreases toward zero but keeps cycling up and down around zero.
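For readers without `birthTS` at hand, here is a sketch of the same idea on a hypothetical simulated monthly series. It swaps in `stats::decompose()`, which also estimates the trend with a centered moving average and the seasonal effect by averaging the detrended values for each month; the chapter's own pipeline may differ in details. The last two lines show how the lag units change depending on whether the residuals keep their ts() attributes.

```r
set.seed(4)
n_years <- 20
n <- 12 * n_years
# Hypothetical monthly series: linear trend + fixed monthly pattern + AR(1) errors
month_effect <- rep(5 * sin(2 * pi * (1:12) / 12), n_years)
errors <- as.numeric(arima.sim(model = list(ar = 0.6), n = n))
x <- ts(100 + 0.1 * (1:n) + month_effect + errors, frequency = 12, start = c(2000, 1))

# decompose(): moving-average trend, then averages by month of the detrended values
parts <- decompose(x, type = "additive")
resid <- na.omit(parts$random)  # the centered 2x12 moving average leaves 6 NAs at each end

acf(resid)              # ts object: lag axis in years (Lag 1 = 12 months)
acf(as.numeric(resid))  # plain numeric vector: lag axis in observations (months)
```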

What about the ACF of birth data after differencing?

```r
acf(diff(birth, 1))                                   # first (lag-1) differencing
acf(diff(diff(birth, 1), lag = 12, differences = 1))  # then seasonal differencing at lag 12
```

Note that the lags here are in years (Lag = 1 on the plot refers to \(k\) = 12) because the data is saved as a ts() object. In the first plot (after only first differencing), we see an autocorrelation of about 0.5 for observations that are 1 year apart. This suggests that there may still be some seasonality to be accounted for. In the second plot, after we also apply seasonal differencing at lag 12, that autocorrelation decreases (and actually becomes a bit negative).
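Why does first differencing leave seasonality behind? A minimal sketch on a hypothetical deterministic monthly series (a linear trend plus a fixed, made-up 12-month pattern) shows that lag-1 differencing turns the trend into a constant but leaves a repeating 12-month pattern, and that the additional lag-12 difference removes it.

```r
# Hypothetical deterministic monthly series: linear trend + a fixed 12-month pattern
tt <- 1:120
pattern <- c(3, 1, -2, 0, 4, -1, 2, -3, 1, 0, -4, -1)
x <- ts(50 + 0.4 * tt + rep(pattern, 10), frequency = 12)

d1  <- diff(x, lag = 1)                     # removes the linear trend (slope becomes a constant)
d12 <- diff(d1, lag = 12, differences = 1)  # removes what is left of the 12-month pattern

range(d1)       # still varies from month to month: seasonality survives
max(abs(d12))   # zero up to floating-point rounding
```

Differencing also changes the error structure. If the errors were already white noise \(e_t\), then \(e_t - e_{t-12}\) has lag-12 autocorrelation \(-\sigma^2/(2\sigma^2) = -0.5\), which is one possible reason a seasonally differenced series can show a negative lag-12 autocorrelation.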

Now, do you notice the blue, dashed horizontal lines?

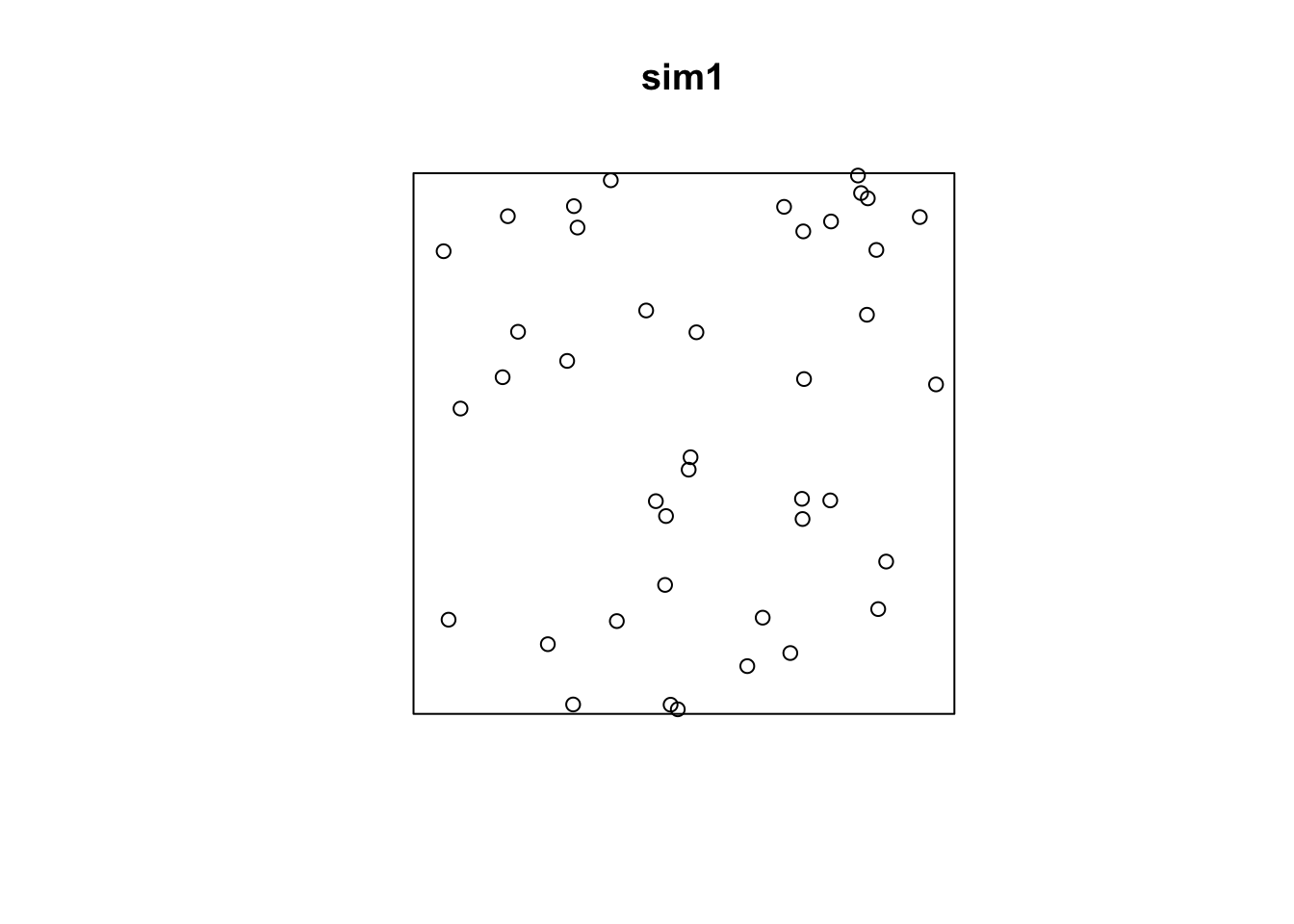

These are guidelines for us. If the random process is white noise, such that the observations are independent and identically distributed with constant mean (of 0) and constant variance \(\sigma^2\), we'd expect the sample autocorrelation to fall within these dashed horizontal lines (\(\pm 1.96/\sqrt{n}\)) at roughly 95% of the lags. Below is an example of Gaussian white noise and its sample autocorrelation function.

```r
y <- ts(rnorm(500))  # 500 draws of Gaussian white noise
plot(y)
acf(y)
```
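To see where the dashed lines come from, the sketch below recomputes the bound and counts how many of the first 40 nonzero lags of a simulated white noise series fall inside it. By default, `plot.acf()` draws the lines at `qnorm((1 + ci)/2) / sqrt(n.used)` with `ci = 0.95`, which is the \(\pm 1.96/\sqrt{n}\) above; the seed and `lag.max = 40` are arbitrary choices.

```r
set.seed(5)
n <- 500
y <- ts(rnorm(n))                   # Gaussian white noise, as in the example above

r <- acf(y, lag.max = 40, plot = FALSE)$acf[-1, 1, 1]  # drop lag 0, which is always 1
bound <- qnorm(0.975) / sqrt(n)     # the dashed lines; qnorm(0.975) is about 1.96

bound                               # about 0.0877 for n = 500
mean(abs(r) <= bound)               # fraction of lags inside the lines; typically near 0.95
```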

Many stationary time series have recognizable ACF patterns. We’ll get familiar with those in a moment.

Most time series that we encounter in practice, however, are not stationary. Thus, our first step will always be to deal with the trend and seasonality. Then we can model the residuals with a stationary model.